“Keepin' it fresh (and good)!” - Continuous Ingestion of OSM Data at Facebook

Christopher Klaiber and Kevin Ventullo

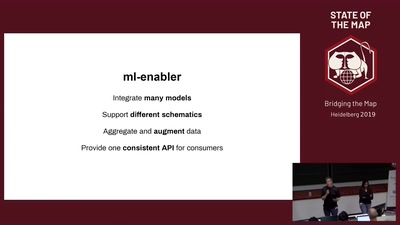

Building on our work presented last year at State of the Map, we have created a system that assists mappers with machine-learned models. We have also built an automated ingestion framework for OSM data at scale that lets us selectively update parts of the map instead of replacing a full snapshot.

Building on our work on __Mobius Logical Changesets__ (presented last year at SotM US 2018), we have created an __automated ingestion and integrity framework__ for OSM data that allows us to __selectively__ update parts of the map instead of applying a full snapshot change all at once.

Decomposing a large set of changes in this way gives us the flexibility to __rapidly ingest__ our own additions to the map, to focus on __geographical areas of importance__ to downstream products, and to __quickly apply hotfixes__ whenever egregious problems arise.

With millions of tiny changes happening every week, we have built a system around __per-feature approval and preprocessing__. It allows us to ingest changes at scale while applying rules that __automatically process logical changesets and enforce integrity constraints (e.g. anti-vandalism and anti-profanity checks).__
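To make the idea of per-feature approval concrete, here is a minimal sketch of a rule-based check, not the actual implementation. Everything in it is an assumption made for illustration: the `FeatureChange` type, the `BLOCKLIST` contents, the two rule functions, and the thresholds in the tag-deletion rule.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class FeatureChange:
    """One change to a single map feature (hypothetical shape)."""
    feature_id: str
    old_tags: Dict[str, str]
    new_tags: Dict[str, str]


# A rule returns a rejection reason, or None if the change passes.
Rule = Callable[[FeatureChange], Optional[str]]

# Placeholder blocklist; a real anti-profanity list would be far larger.
BLOCKLIST = {"badword"}


def no_blocked_words(change: FeatureChange) -> Optional[str]:
    """Reject a change whose new name contains a blocklisted word."""
    name = change.new_tags.get("name", "")
    for word in name.lower().split():
        if word in BLOCKLIST:
            return f"blocked word in name: {word}"
    return None


def no_mass_tag_deletion(change: FeatureChange) -> Optional[str]:
    """Reject a change that strips most tags from a well-tagged feature."""
    removed = set(change.old_tags) - set(change.new_tags)
    # Assumed heuristic: at least 4 tags before, more than half removed.
    if len(change.old_tags) >= 4 and len(removed) > len(change.old_tags) // 2:
        return "more than half of the tags were removed"
    return None


RULES: List[Rule] = [no_blocked_words, no_mass_tag_deletion]


def review(
    changes: List[FeatureChange],
) -> Tuple[List[FeatureChange], Dict[str, List[str]]]:
    """Split changes into approved ones and rejected ones with reasons."""
    approved: List[FeatureChange] = []
    rejected: Dict[str, List[str]] = {}
    for change in changes:
        reasons = [r for rule in RULES if (r := rule(change)) is not None]
        if reasons:
            rejected[change.feature_id] = reasons
        else:
            approved.append(change)
    return approved, rejected
```

Because each feature is evaluated on its own, a rejected change does not block the approved changes around it, which is what makes selective ingestion possible.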

Because some changes in OpenStreetMap depend on context, the system combines human approval, which is necessary for highly visible features such as the names of large administrative areas, with __automated AI/ML-based approval__: for example, using __computer vision techniques__ to reconcile newly created features against __satellite imagery ground truth__, or applying __NLP techniques__ to determine whether other user-visible string changes are sensible and valid. These components are combined to create a __continuous ingest-validate-deploy cycle__ for OSM map data.
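The split between human and automated approval can be pictured as a routing step. The following is a sketch under stated assumptions rather than a description of the production system: `route_change`, the `Route` enum, the score thresholds, and the "admin_level of 4 or lower" cutoff for "large" administrative areas are all hypothetical, and `model_score` stands in for whatever confidence a vision or NLP model would produce. The `boundary=administrative` and `admin_level` tags themselves are standard OSM tagging.

```python
from enum import Enum
from typing import Dict, Set


class Route(Enum):
    HUMAN = "human"
    AUTO_APPROVE = "auto_approve"
    AUTO_REJECT = "auto_reject"


def is_large_admin_area(tags: Dict[str, str], max_level: int = 4) -> bool:
    """True for administrative boundaries at or above the assumed cutoff."""
    if tags.get("boundary") != "administrative":
        return False
    level = tags.get("admin_level", "")
    # admin_level is a free-text tag, so guard against non-numeric values.
    return level.isdigit() and int(level) <= max_level


def route_change(
    changed_keys: Set[str],
    tags: Dict[str, str],
    model_score: float,
    approve_at: float = 0.9,  # assumed threshold
    reject_at: float = 0.1,   # assumed threshold
) -> Route:
    """Decide who reviews a change: a person or the automated models."""
    # Highly visible names always go to a human, regardless of the score.
    if "name" in changed_keys and is_large_admin_area(tags):
        return Route.HUMAN
    if model_score >= approve_at:
        return Route.AUTO_APPROVE
    if model_score <= reject_at:
        return Route.AUTO_REJECT
    # The model is unsure, so fall back to human review.
    return Route.HUMAN
```

For example, a renamed country boundary is routed to a human even with a high model score, while a renamed residential street with the same score would be approved automatically.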